Build a Job

Our video shapes tool allows users to create a video annotation job with a custom ontology.

Data

Data preparation for a Video Object Tracking job is simple, but a few key requirements, outlined below, ensure the data is processed correctly and can be annotated. To get started, here is what you’ll need:

Source Data

The source data can be either a video or a list of frames. When using videos as source data, the video files should be:

- hosted and publicly viewable
- in MP4 or AVI format
- less than 2 GB in size or 35 minutes in duration
- broken into workable-sized rows
  - As a best practice, we recommend about 100 frames per video. Depending on your frame rate, this works out to clips of roughly 3 to 10 seconds each.
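The 100-frame guideline is simple arithmetic on the frame rate. A minimal sketch, assuming you already know each video's frame rate and total frame count; `clip_seconds` and `plan_clips` are hypothetical helpers, and the actual cutting is left to whatever video tool you use:

```python
def clip_seconds(fps: float, frames_per_clip: int = 100) -> float:
    """Duration in seconds of a clip holding `frames_per_clip` frames."""
    return frames_per_clip / fps


def plan_clips(total_frames: int, frames_per_clip: int = 100) -> list[tuple[int, int]]:
    """Split a video into half-open frame ranges [start, end) of at most
    `frames_per_clip` frames; the last range holds whatever is left over."""
    return [
        (start, min(start + frames_per_clip, total_frames))
        for start in range(0, total_frames, frames_per_clip)
    ]


# A 30 fps video gives ~3.3 s clips; a 10 fps video gives 10 s clips.
print(round(clip_seconds(30), 1))  # 3.3
print(clip_seconds(10))            # 10.0
print(plan_clips(250))             # [(0, 100), (100, 200), (200, 250)]
```

Each frame range would then become one clip, and each clip one row in the source file.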

Source file

-

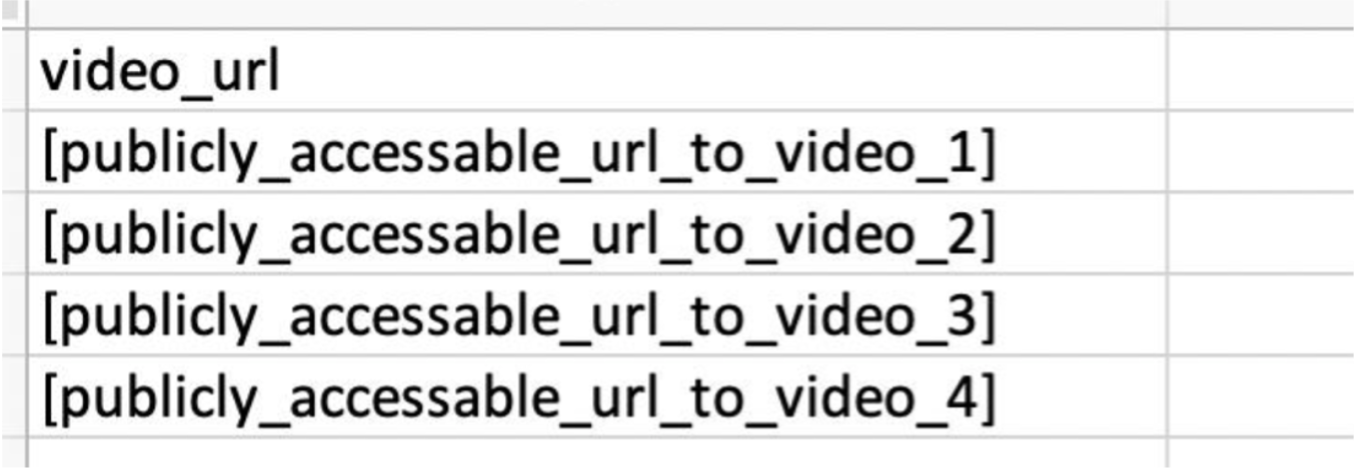

The source file should have least one column with the link to the source data to be annotated with a column header (Ex. “video-url” or “frames”)

- As needed, you can pass any other metadata along as columns.
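If you generate the source file programmatically, Python's `csv` module is enough. A minimal sketch; the `make_source_csv` helper, the example URLs and the `camera` metadata column are all hypothetical, and putting the URL column first is only a convention:

```python
import csv
import io


def make_source_csv(rows: list[dict], url_column: str = "video-url") -> str:
    """Render source rows as CSV text: the URL column first, then any
    metadata columns (sorted by name) after it."""
    extra = sorted({key for row in rows for key in row} - {url_column})
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[url_column, *extra], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical hosted clips plus a made-up "camera" metadata column.
rows = [
    {"video-url": "https://example.com/clips/clip_001.mp4", "camera": "north"},
    {"video-url": "https://example.com/clips/clip_002.mp4", "camera": "south"},
]
print(make_source_csv(rows))
```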

Here is an example source file:

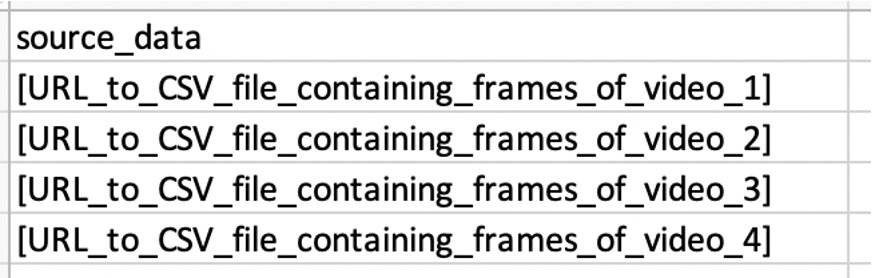

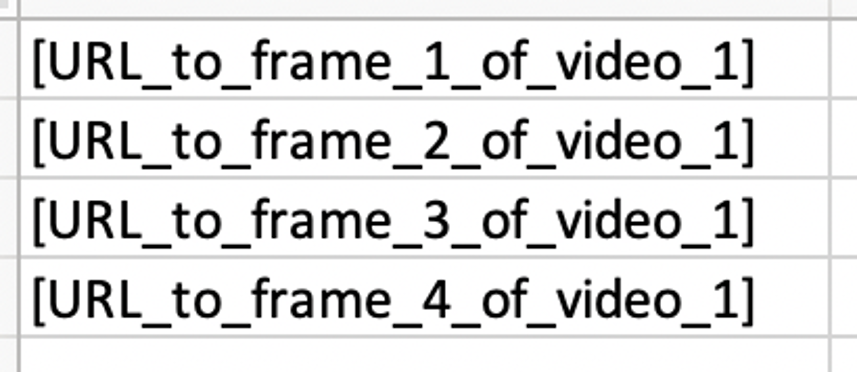

When using frames as source data, instead of using a URL of the video file, you would use a URL of a CSV file containing a list of URLs, each pointing to a single frame. The linked CSV file should contain one URL per line and doesn’t need a header.

Here is an example source file:

Here is an example csv file containing frames of a video file:
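As a rough illustration of both files, here is a minimal sketch. The helpers and URLs are hypothetical, and it assumes the line order of the frames CSV is the playback order of the frames. The frames CSV must itself be hosted, and its URL is what goes in the source file's `frames` column:

```python
import csv
import io


def make_frames_csv(frame_urls: list[str]) -> str:
    """One frame URL per line, no header (line order assumed = playback order)."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for url in frame_urls:
        writer.writerow([url])
    return buf.getvalue()


def make_frames_source_csv(frames_csv_urls: list[str]) -> str:
    """Source file whose "frames" column points at hosted frame-list CSVs."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["frames"])
    for url in frames_csv_urls:
        writer.writerow([url])
    return buf.getvalue()


frame_urls = [f"https://example.com/video_001/frame_{i:04d}.jpg" for i in range(1, 4)]
print(make_frames_csv(frame_urls))
print(make_frames_source_csv(["https://example.com/video_001/frames.csv"]))
```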

Note: Secure Data Access is supported if using frames as source data. Please contact your Customer Success Manager to set up SDA for your video object tracking job.

Instructions

- We recommend including an instructional video.
  - Creating a video will help you understand the tool and discover some of the edge cases in your data. It will also give contributors context on how the videos should be annotated.
- Provide guidance on how the tool works.
  - The video annotation tool has built-in features to help contributors annotate more efficiently, including a full menu of hotkeys and tooltips. Feel free to copy and paste these tips into your instructions:

| Function | Hotkey | Tooltip |
| --- | --- | --- |
| Pan | Hold Spacebar | Pan (spacebar) |
| Zoom In | + | Zoom In (+) |
| Zoom Out | - | Zoom Out (-) |
| Reframe | r/R | Reframe (R) |
| Focus Mode | f/F | Focus Mode (F) |
| Hide Mode | h/H | Hide Mode (H) |
| Show Fullscreen | e/E | Minimize/Expand (E) |
| Play/Pause | p/P | Play/Pause (P) |
| Previous Frame | ← | Prev Frame (←) |
| Next Frame | → | Next Frame (→) |

CML

CML for a Video Object Tracking job is available to our Enterprise Appen users. Please contact your Customer Success Manager for access to this code. The product is in BETA, so please consider the following:

- The job needs to be designed in CML and there is currently no graphical editor for this tool

- Launching this job requires one of our trusted video annotation channels. Please reach out to your Customer Success Manager to set this up.

- Test Questions are not supported

- Aggregation is not supported

- If you need any help, don’t hesitate to reach out to the Appen Platform Support team.

Parameters

Below are the parameters available for the cml:video_shapes tag.

- name: the name of your output column
- source-data: the column header containing the video or set of frames to be annotated
- disable_image_boundaries: allows shapes to be drawn outside the image boundary
  - When disable_image_boundaries="true", shapes can be dragged outside the image boundaries and the output will contain negative values.
  - The default setting for disable_image_boundaries is "false".
- use-frames: defaults to "false". If set to "true", the tool expects a URL pointing to a list of frames as source data.
- assistant: accepts "linear_interpolation", "object_tracking", or "none". There are two different types of machine assistance to create annotations:
  - Object Tracking is ideal when:
    - The camera is moving
    - Note: This is only available for bounding boxes. If any other shapes are being used, your job must use Linear Interpolation.
  - Linear Interpolation is ideal when:
    - The objects are moving in a linear fashion
    - The camera is stationary
    - The objects being tracked are small and often change in size
  - Configure assistant="none" when no interpolation between frames is desired. This may be helpful for a review or QA job.
- type: accepts a comma-separated array of any of the five shape types.
  - Example: type="['box','polygon','dot','line','ellipse']"
- review-data: This is an optional parameter that will be the column header containing pre-created annotations for a video. The format must match the output of the video shapes tool (JSON in a hosted URL).
- task-type: Please set task-type="qa" when designing a review or QA job. This parameter needs to be used in conjunction with review-data. See [this article] for more details.
- require-views: This is an optional parameter that accepts 'true' or 'false'.
  - If 'false', contributors are not required to view every frame of the video before submitting.
- allow-frame-rotation (optional):
  - Accepts true or false.
  - If true, contributors can rotate the video frames within the video annotation tool. Contributors click a toolbar icon to turn on a rotation slider that can be used to adjust the rotation angle from 0 to 359 degrees. Contributors do not have to manually rotate every frame: the rotation angle is linearly interpolated between manually rotated frames, and frames after the last manually rotated frame inherit its degree of rotation.
  - Defaults to false if the attribute is not present.

Figure 1: Demonstration of video frame rotation interpolation. The contributor manually rotates frame 7, and frames 2–6 are machine rotated, interpolating the relative rotation between frames 1 and 7.
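The rotation behaviour in Figure 1 can be sketched as plain linear interpolation between human-set keyframes. This is an illustration, not the tool's code. `interpolate_rotation` is a hypothetical helper that returns the `frameRotation` and `rotatedBy` keys used in the output schema. It assumes frame 1 starts at 0 degrees unless rotated manually, and it does not handle wrap-around (for example 350° to 10°), which this article does not describe:

```python
def interpolate_rotation(num_frames: int, manual: dict[int, float]) -> dict[int, dict]:
    """Fill in a rotation angle for every frame (frames are 1-based).

    `manual` maps frame number -> angle a contributor set by hand.
    Assumption: frame 1 is at 0 degrees unless it was rotated manually.
    """
    anchors = {1: 0.0, **manual}
    keys = sorted(anchors)
    result = {}
    for frame in range(1, num_frames + 1):
        if frame in anchors:
            angle = float(anchors[frame])
        else:
            prev = max(k for k in keys if k < frame)
            later = [k for k in keys if k > frame]
            if not later:
                # After the last manually rotated frame: inherit its rotation.
                angle = float(anchors[prev])
            else:
                # Between two keyframes: linear interpolation.
                nxt = later[0]
                t = (frame - prev) / (nxt - prev)
                angle = anchors[prev] + (anchors[nxt] - anchors[prev]) * t
        result[frame] = {
            "frameRotation": angle,
            "rotatedBy": "human" if frame in manual else "machine",
        }
    return result


# Figure 1 scenario: frame 7 rotated by hand to 60 degrees in a 10-frame clip.
rotation = interpolate_rotation(10, {7: 60})
for frame, info in rotation.items():
    print(frame, info["frameRotation"], info["rotatedBy"])
```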

- Shape Type Limiter
  - Limit which shapes can be used with certain classes
- Min/Max instance quantity
  - Configure ontologies with instance limits
  - Comes with the ability to mark the class as not present for long tail scenarios. This information will be added to the output as well.
- Customizable Hotkeys
  - Hotkeys can be assigned to classes by the user. Hotkeys cannot conflict with any other browser or tool shortcuts.
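To make the `linear_interpolation` assistant concrete: it fills in the frames between ones a contributor positioned by hand. As an illustration of the general technique only, and not the tool's actual code, the sketch below interpolates each box field (x, y, w, h, as in the output schema) independently between two keyframes. Treating the four fields independently is an assumption, and `interpolate_box` is a hypothetical helper:

```python
def interpolate_box(frame: int, key_a: int, box_a: dict, key_b: int, box_b: dict) -> dict:
    """Linearly interpolate a box between keyframes key_a <= frame <= key_b.

    Boxes are dicts with x, y (top-left corner), w and h in pixels.
    """
    if not key_a <= frame <= key_b or key_a == key_b:
        raise ValueError("frame must lie between two distinct keyframes")
    t = (frame - key_a) / (key_b - key_a)
    return {k: box_a[k] + (box_b[k] - box_a[k]) * t for k in ("x", "y", "w", "h")}


start = {"x": 0, "y": 0, "w": 10, "h": 10}    # keyframe at frame 0
end = {"x": 100, "y": 50, "w": 20, "h": 30}   # keyframe at frame 10
print(interpolate_box(5, 0, start, 10, end))  # halfway between the two boxes
```

Because every in-between frame is a straight-line blend of its two keyframes, this approach fits objects moving in a linear fashion; curved or erratic motion needs more keyframes.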

Ontology

- You will also need to create or upload an ontology of at least one class. To do this, navigate to the Design > Ontology Manager page. See Guide To: Ontology Manager for more detail.

- Ontology attributes are also supported for the cml:video_shapes tool. For more detail, see Guide To: Running a Job with Ontology Attributes.
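Since the CML for this tool comes from your Customer Success Manager, the following is only a hypothetical helper for generating and sanity-checking a cml:video_shapes tag from Python. It encodes the constraints stated under Parameters: object_tracking only with boxes, task-type="qa" only together with review-data, and the listed shape types. Two assumptions to verify against the CML you receive: column references are written as `{{column}}` placeholders, as in other Appen CML tags, and the tag is self-closing.

```python
SHAPE_TYPES = {"box", "polygon", "dot", "line", "ellipse"}
ASSISTANTS = {"linear_interpolation", "object_tracking", "none"}
# Python keyword -> attribute name exactly as listed under Parameters.
BOOL_ATTRS = {
    "disable_image_boundaries": "disable_image_boundaries",
    "use_frames": "use-frames",
    "require_views": "require-views",
    "allow_frame_rotation": "allow-frame-rotation",
}


def build_video_shapes_tag(name, source_column, types, assistant="linear_interpolation",
                           review_column=None, task_type=None, **flags):
    """Return a cml:video_shapes tag string after checking documented constraints."""
    if not types:
        raise ValueError("at least one shape type is required")
    unknown = set(types) - SHAPE_TYPES
    if unknown:
        raise ValueError(f"unsupported shape types: {sorted(unknown)}")
    if assistant not in ASSISTANTS:
        raise ValueError(f"unknown assistant: {assistant}")
    if assistant == "object_tracking" and set(types) != {"box"}:
        raise ValueError("object_tracking is only available for bounding boxes")
    if task_type == "qa" and review_column is None:
        raise ValueError('task-type="qa" must be used with review-data')

    type_list = "[" + ",".join(f"'{t}'" for t in types) + "]"
    attrs = [
        ("name", name),
        ("source-data", "{{" + source_column + "}}"),  # assumed placeholder syntax
        ("type", type_list),
        ("assistant", assistant),
    ]
    if review_column is not None:
        attrs.append(("review-data", "{{" + review_column + "}}"))
    if task_type is not None:
        attrs.append(("task-type", task_type))
    for key, value in flags.items():
        if key not in BOOL_ATTRS:
            raise ValueError(f"unknown flag: {key}")
        attrs.append((BOOL_ATTRS[key], "true" if value else "false"))

    rendered = " ".join(f'{key}="{value}"' for key, value in attrs)
    return f"<cml:video_shapes {rendered} />"


print(build_video_shapes_tag("annotation", "video_url", ["box"],
                             assistant="object_tracking", require_views=True))
```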

Settings

Necessary settings:

- 1 row per page
- 1 judgment per row
- At least 3 hours per assignment. This can be set via the API using the following command, where {n} is the time per assignment in seconds (for example, 10800 for 3 hours), or by contacting the Appen Platform Support team.

Set Time Per Assignment:

```shell
curl -X PUT --data-urlencode "job[options][req_ttl_in_seconds]={n}" "https://api.appen.com/v1/jobs/{job_id}.json?key={api_key}"
```
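For scripted job setup, the same request can be built with Python's standard library. A minimal sketch: `build_ttl_request` is a hypothetical helper, the endpoint and form field are taken from the curl command in this section, and nothing is sent until you call `urlopen` yourself:

```python
import urllib.parse
import urllib.request


def build_ttl_request(job_id: int, api_key: str, hours: float = 3) -> urllib.request.Request:
    """Build (but do not send) the PUT that sets time per assignment."""
    seconds = int(hours * 3600)
    # Mirrors curl --data-urlencode "name=value": the name is sent as-is,
    # only the value part is URL-encoded.
    body = "job[options][req_ttl_in_seconds]=" + urllib.parse.quote(str(seconds))
    url = (f"https://api.appen.com/v1/jobs/{job_id}.json?"
           + urllib.parse.urlencode({"key": api_key}))
    return urllib.request.Request(
        url,
        data=body.encode("ascii"),
        method="PUT",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


req = build_ttl_request(job_id=123456, api_key="YOUR_API_KEY")
print(req.get_method(), req.full_url)
print(req.data)
# To actually send it: urllib.request.urlopen(req)
```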

Monitoring and Reviewing Results

As this is a BETA feature, aggregation is not supported. It is recommended to use a trusted partner. Contact your CSM to set up a managed crowd or reference this article to use an internal crowd.

Results

The output data of a video bounding box job is linked in the output column, the name of which is configurable.

The link will point you to a JSON file with results for the corresponding video. As long as the contributor was able to annotate the video, the output JSON will contain keys for `shapes`, which stores information for every object in the video that doesn't change over frames; `ontologyAttributes`, which stores ontology attribute question information that doesn't change over frames; and `frames`, which stores all information that changes over frames. Object coordinates and metadata are not stored for frames in which the object was marked "hidden". The schema is:

```
{
  ableToAnnotate: true | false,
  annotation: {
    shapes: { // Holds the instance information that doesn't change
      [shapeId]: { category, report_value, type, former_shapes }
      // ...
    },
    frames: { // Holds frames information, and shape instance information that changes
      [frameId]: {
        frameRotation: 0 - 359,
        rotatedBy: 'machine' | 'human',
        shapesInstances: { // Holds instance information that changes (coordinates, dimensions, ontology answers/metadata). Only visible shapes are present.
          [shapeId]: { x, y, w, h, annotated_by, metadata }
          // ...
        }
      }
      // ...
    },
    ontologyAttributes: { // Holds ontology attributes questions information that doesn't change
      [ontologyName]: {
        questions: [{ id, type, data }, ...]
      }
    },
  }
}
```

The attributes are:

- Type: This is the format of the annotation, in this case “box”

- ID: The unique and persistent ID for the boxed object

- Category: This is the class of the object from your ontology

- Annotated_By: This field describes whether the box in this frame is an output of our machine learning (“machine”) or was adjusted or corrected by a person (“human”)

- X / Y: The pixel X and Y coordinates of the box’s top-left corner

- Height / Width: The height and width of the box in pixels

- Visibility: This will be “visible” when the object is in view and “hidden” when the object is hidden or out of frame.
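To analyse results, it is often convenient to flatten the output JSON into one row per frame and visible shape. A minimal sketch following the schema above; `load_output` and `flatten_output` are hypothetical helpers, and the sample data is made up. Keys are read with `.get` because non-box shapes may not carry x, y, w and h:

```python
import json
import urllib.request


def load_output(url: str) -> dict:
    """Download the JSON file linked in the output column."""
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def flatten_output(output: dict) -> list[dict]:
    """One row per (frame, visible shape instance), joining per-frame
    coordinates with the static shape info. Hidden instances are absent
    from `frames`, so they produce no row."""
    if not output.get("ableToAnnotate"):
        return []
    annotation = output.get("annotation", {})
    shapes = annotation.get("shapes", {})
    rows = []
    for frame_id, frame in annotation.get("frames", {}).items():
        for shape_id, inst in frame.get("shapesInstances", {}).items():
            static = shapes.get(shape_id, {})
            rows.append({
                "frame": frame_id,
                "shape": shape_id,
                "category": static.get("category"),
                "type": static.get("type"),
                "x": inst.get("x"), "y": inst.get("y"),
                "w": inst.get("w"), "h": inst.get("h"),
                "annotated_by": inst.get("annotated_by"),
            })
    return rows


# Made-up sample: shape "s1" is visible in frame "0" and hidden in frame "1".
sample = {
    "ableToAnnotate": True,
    "annotation": {
        "shapes": {"s1": {"category": "car", "type": "box"}},
        "frames": {
            "0": {"frameRotation": 0, "rotatedBy": "machine",
                  "shapesInstances": {"s1": {"x": 10, "y": 20, "w": 30, "h": 40,
                                             "annotated_by": "human"}}},
            "1": {"frameRotation": 0, "rotatedBy": "machine", "shapesInstances": {}},
        },
    },
}
for row in flatten_output(sample):
    print(row)
```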

Considerations When Using Videos as Source Data

Unlike a typical Appen job, once the job is launched, we pre-process the video data linked in the job. While this is happening, each row is in the “preprocessing” state before becoming “judgable”.

If the unit cannot be preprocessed it will be automatically canceled. This is to prevent contributors from seeing a broken tool and collecting annotations on incorrectly formatted data. Some common reasons a video row may be canceled are:

- The video file is too large or contains too many frames

- The URL provided does not lead to a visible video file - either the permissions are incorrect or the file is otherwise corrupted

If this occurs and you’re able to identify and correct the issue, you can re-upload the video and order a judgment on the new rows.
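Some of these problems can be caught before launch by sending a HEAD request to each video URL. A minimal sketch with hypothetical helpers: it reads the 2 GB limit conservatively as 2 × 10⁹ bytes, and the Content-Type test is only a heuristic, since some hosts serve valid videos as `application/octet-stream` and some servers do not answer HEAD requests at all. It is meant for video URLs, not for frame-list CSVs:

```python
import urllib.error
import urllib.request

# Assumption: the 2 GB limit read conservatively as 2 * 10**9 bytes.
MAX_BYTES = 2 * 10**9


def head(url: str, timeout: float = 10.0):
    """Send a HEAD request; return (status, headers) even on HTTP errors."""
    req = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status, resp.headers
    except urllib.error.HTTPError as err:
        return err.code, err.headers


def check_head(status, headers) -> list[str]:
    """Return a list of likely problems (empty if none were detected)."""
    problems = []
    if status != 200:
        problems.append(f"HTTP {status}: file may not be publicly viewable")
    length = headers.get("Content-Length")
    if length is not None and int(length) >= MAX_BYTES:
        problems.append(f"file is {int(length)} bytes, over the size limit")
    ctype = headers.get("Content-Type", "")
    if ctype and not ctype.startswith("video/"):
        problems.append(f"Content-Type is {ctype!r}, not a video type")
    return problems


# Usage against a real URL (network access required):
# status, headers = head("https://example.com/clips/clip_001.mp4")
# print(check_head(status, headers))
```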

Note: Preview of the job and tool will not work prior to launch. The frames need preprocessing before they can be loaded and as a result, processing will not begin until the job is launched.