Overview

As part of the Pre-labeling (Beta) feature, Appen offers a library of machine learning model templates that provide initial "best-guess" hypotheses for an annotation project. This is helpful for specialized annotation projects: giving contributors model-predicted annotation hypotheses can dramatically cut annotation time while maintaining or improving annotation quality. The sections below describe the models available in the model template library. See this article for information about adding your own custom templates.

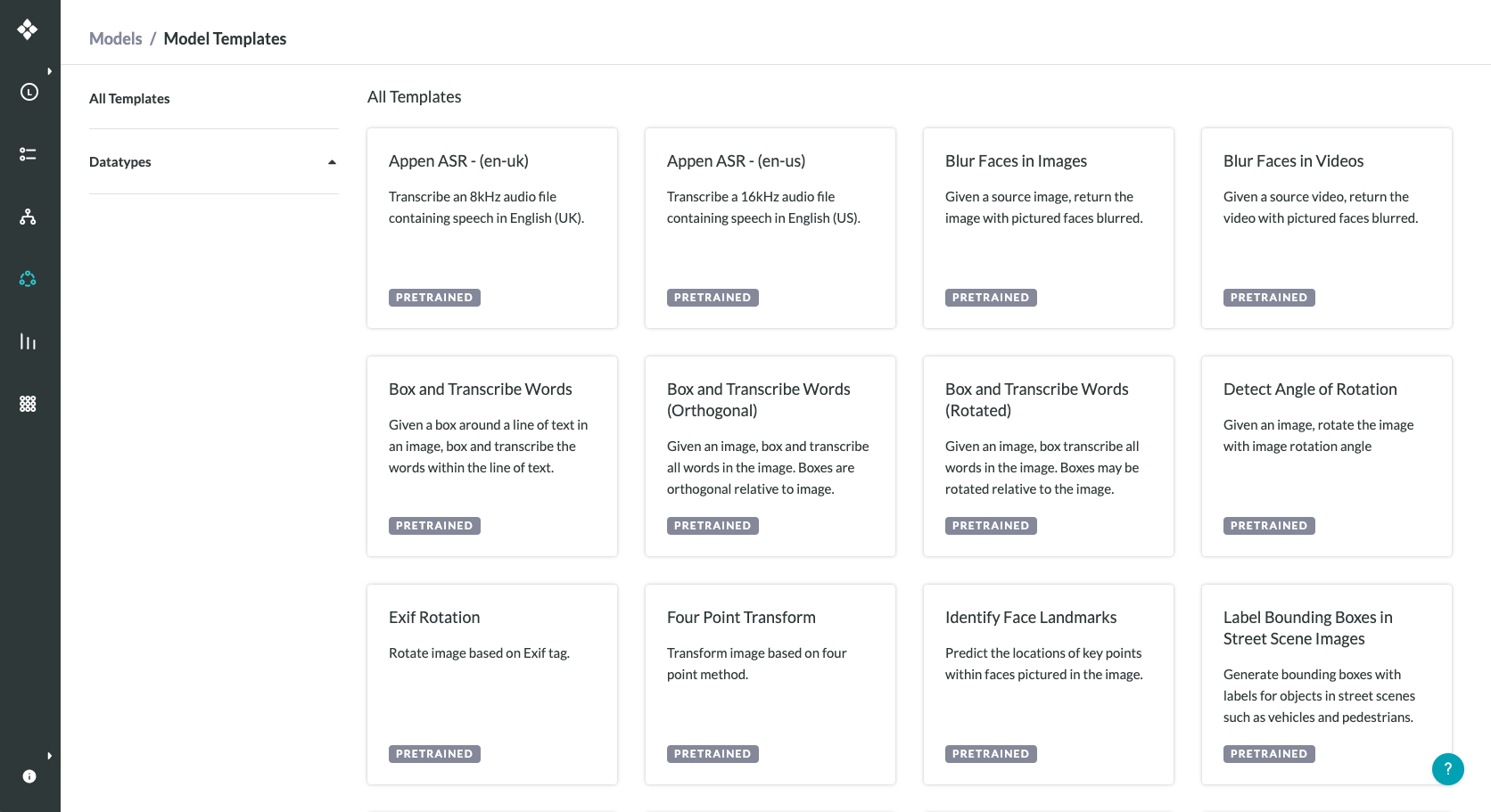

Fig. 1: Model Template Library

Important note: This feature is part of our managed services offering; contact your Customer Success Manager for access or more information.

Blur Faces in Images

This model accepts URLs of images and returns URLs pointing to copies of the source images with any pictured faces blurred.

- The face encoding model uses Adam Geitgey’s face recognition library, which is built using dlib’s face recognition model.

- It was trained on a dataset containing about 3 million images of faces grouped by individual.

- The model achieves an accuracy of 99.38% on the standard Labeled Faces in the Wild dataset, which means that given two images of faces, it correctly predicts if the images are of the same person 99.38% of the time.

- To learn more about the model, please read this blog post.

Box and Transcribe Words

This model is designed to be used as part of a document transcription workflow with the following steps:

- A contributor draws bounding boxes around lines of text in an image

- Given a bounding box around a line of text, the model predicts the bounding box coordinates and transcriptions corresponding to each word in the line of text

- A contributor reviews the model’s predictions

In addition to input columns for the images and the text-line bounding boxes, this model also takes an input for the language in which to make the transcription prediction. The values accepted are two-letter ISO 639-1 language codes.

- The following language codes are accepted: 'af': 'Afrikaans', 'ar': 'Arabic', 'cs': 'Czech', 'da': 'Danish', 'de': 'German', 'en': 'English', 'el': 'Greek', 'es': 'Spanish', 'fi': 'Finnish', 'fr': 'French', 'ga': 'Irish', 'he': 'Hebrew', 'hi': 'Hindi', 'hu': 'Hungarian', 'id': 'Indonesian', 'it': 'Italian', 'jp': 'Japanese', 'ko': 'Korean', 'nn': 'Norwegian', 'nl': 'Dutch', 'pl': 'Polish', 'pt': 'Portuguese', 'ro': 'Romanian', 'ru': 'Russian', 'sv': 'Swedish', 'th': 'Thai', 'tr': 'Turkish', 'zh': 'Chinese', 'vi': 'Vietnamese', 'zh-sim': 'Chinese (Simplified)', 'zh-tra': 'Chinese (Traditional)'

- The underlying optical character recognition (OCR) model is the Tesseract Open-Source OCR Engine.

- The model is designed to recognize printed text and is unlikely to work well on handwriting.

- Please refer to the model documentation for further support.
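The language input can be checked before rows are uploaded. The following is a minimal sketch, not part of the product: the helper name `validate_language_code` is hypothetical, and trimming whitespace and lowercasing the value are assumptions about how strictly the column is matched. The codes are copied as listed above, including 'jp' for Japanese (ISO 639-1 itself defines 'ja').

```python
# Accepted codes, copied from the list in this article.
ACCEPTED_LANGUAGE_CODES = {
    "af": "Afrikaans", "ar": "Arabic", "cs": "Czech", "da": "Danish",
    "de": "German", "en": "English", "el": "Greek", "es": "Spanish",
    "fi": "Finnish", "fr": "French", "ga": "Irish", "he": "Hebrew",
    "hi": "Hindi", "hu": "Hungarian", "id": "Indonesian", "it": "Italian",
    "jp": "Japanese", "ko": "Korean", "nn": "Norwegian", "nl": "Dutch",
    "pl": "Polish", "pt": "Portuguese", "ro": "Romanian", "ru": "Russian",
    "sv": "Swedish", "th": "Thai", "tr": "Turkish", "zh": "Chinese",
    "vi": "Vietnamese", "zh-sim": "Chinese (Simplified)",
    "zh-tra": "Chinese (Traditional)",
}


def validate_language_code(code: str) -> str:
    """Return the normalized code, or raise ValueError if it is not listed.

    Normalization (strip + lowercase) is an assumption made for this sketch.
    """
    normalized = code.strip().lower()
    if normalized not in ACCEPTED_LANGUAGE_CODES:
        raise ValueError(f"Unsupported language code: {code!r}")
    return normalized


if __name__ == "__main__":
    print(validate_language_code("en"), ACCEPTED_LANGUAGE_CODES["en"])
```

Running a check like this over the language column catches unsupported values before they reach the model.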

Identify Face Landmarks

This model accepts URLs of images and returns JSON strings containing the coordinates of predicted facial landmarks.

- The face encoding model uses Adam Geitgey’s face recognition library, which is built using dlib’s face recognition model.

- It was trained on a dataset containing about 3 million images of faces grouped by individual.

- The model achieves an accuracy of 99.38% on the standard Labeled Faces in the Wild dataset, which means that given two images of faces, it correctly predicts if the images are of the same person 99.38% of the time.

- To learn more about the model, please read this blog post.

Label Pixels in Street Scene Images

This model generates pixel-level semantic segmentation masks for street scene images, such as images containing cars, trucks, buildings, pedestrians and signs. It can be useful for enhancing annotation efficiency and quality for autonomous vehicle and related use cases.

- The underlying model is the High-Resolution Networks (HRNet) semantic segmentation model.

- It is trained on the Cityscapes dataset and achieves a mean intersection-over-union of 0.82.

- Download ontology
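Mean intersection-over-union (mIoU) averages, over classes, the ratio of pixels where prediction and ground truth agree on a class to pixels where either of them assigns that class. The sketch below computes it for one pair of small label grids. The function name and the list-of-lists mask format are assumptions for illustration; benchmark scores such as the Cityscapes figure are typically accumulated over a whole test set rather than a single image.

```python
from typing import List, Sequence


def mean_iou(pred: Sequence[Sequence[int]],
             truth: Sequence[Sequence[int]],
             num_classes: int) -> float:
    """Mean IoU over the classes that appear in either mask."""
    intersection: List[int] = [0] * num_classes
    union: List[int] = [0] * num_classes
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p == t:
                # The pixel counts once toward both intersection and union.
                intersection[p] += 1
                union[p] += 1
            else:
                # A mismatch enlarges the union of both classes involved.
                union[p] += 1
                union[t] += 1
    ious = [i / u for i, u in zip(intersection, union) if u > 0]
    if not ious:
        raise ValueError("masks contain no labeled pixels")
    return sum(ious) / len(ious)


if __name__ == "__main__":
    predicted = [[0, 0], [1, 1]]
    ground_truth = [[0, 1], [1, 1]]
    print(round(mean_iou(predicted, ground_truth, num_classes=2), 4))
```

In this toy example class 0 has an IoU of 1/2 and class 1 has an IoU of 2/3, so the mean is 7/12.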

Segment Audio

This model classifies periods of time in an audio file according to the sound they contain; pre-labeling with this model can streamline audio segmentation and transcription workflows. The model segments audio into the classes “speech”, “music”, “noise” and “silence”. It accepts audio files and returns the class labels, start times and end times of the identified segments.

- This model uses the SpeechSegmenter library, which has been trained on INA’s Speaker Dictionary.
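As an illustration of how the returned labels, start times and end times might be used downstream, the sketch below keeps only the “speech” segments and totals their duration. The `Segment` structure and its field names are hypothetical; this article does not specify the actual output schema.

```python
from typing import List, NamedTuple


class Segment(NamedTuple):
    # Hypothetical structure: one labeled span of audio, times in seconds.
    label: str   # "speech", "music", "noise" or "silence"
    start: float
    end: float


def speech_segments(segments: List[Segment]) -> List[Segment]:
    """Keep only the segments a transcriber would need to listen to."""
    return [s for s in segments if s.label == "speech"]


def total_duration(segments: List[Segment]) -> float:
    """Sum of segment lengths in seconds."""
    return sum(s.end - s.start for s in segments)


if __name__ == "__main__":
    predicted = [
        Segment("silence", 0.0, 1.5),
        Segment("speech", 1.5, 4.0),
        Segment("music", 4.0, 6.0),
        Segment("speech", 6.0, 8.0),
    ]
    speech = speech_segments(predicted)
    print(len(speech), total_duration(speech))
```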

Appen Automatic Speech Recognition (ASR) - (en-us)

This model is trained to provide a hypothesized transcription of the input speech audio. The input speech audio should be US English sampled at 16 kHz.

- This model and ASR service were developed based on the Kaldi toolkit.

- This model was trained using the LibriSpeech corpus and Appen's OTS US English ASR corpora.

- This model can achieve Word Error Rates of 5.01% on a LibriSpeech “clean” test set; 14.49% on a LibriSpeech test set containing “more challenging” speech; 4.92% on an Appen OTS USE-ASR001 test set (read sentences); and 23.18% on an Appen OTS USE-ASR003 test set (conversational speech in talk shows).

- Variation in the acoustic properties of the input audio, such as noise level, channel type, accent, and speaking style (for example, speaker overlap), may reduce accuracy.
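Word Error Rate (WER) is conventionally the number of word substitutions, deletions and insertions needed to turn the hypothesis into the reference, divided by the number of reference words. The sketch below computes it with a word-level edit distance. Splitting on whitespace without any text normalization is a simplifying assumption, and this is not necessarily the scoring procedure behind the figures above.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
    print(f"{wer:.2%}")
```

In the example, one substitution ("sat" to "sit") and one deletion ("the") against six reference words give a WER of 2/6.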

Appen Automatic Speech Recognition (ASR) - (en-uk)

This model is trained to provide a hypothesized transcription of the input speech audio. The input speech audio should be UK English sampled at 8 kHz.

- This model and ASR service were developed based on the Kaldi toolkit.

- This model was trained using Appen's OTS UK English ASR corpora, Voxforge UK English, and OpenSLR-83.

- This model can achieve a Word Error Rate of around 20% on call center conversational UK English speech test data.

- Variation in the acoustic properties of the input audio, such as noise level, channel type, accent, and speaking style (for example, speaker overlap), may reduce accuracy.
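Because the two ASR models expect different sample rates (16 kHz for en-us, 8 kHz for en-uk), it can help to check files before submitting them. The sketch below reads the sample rate of a WAV file with Python's standard `wave` module. It assumes uncompressed WAV input, which this article does not state, and the helper names and the `EXPECTED_RATES` mapping are hypothetical.

```python
import io
import wave
from typing import BinaryIO, Union

# Expected rates in Hz, taken from the model descriptions in this article.
EXPECTED_RATES = {"en-us": 16000, "en-uk": 8000}


def wav_sample_rate(source: Union[str, BinaryIO]) -> int:
    """Return the sample rate of a WAV file given a path or binary file object."""
    with wave.open(source, "rb") as wav_file:
        return wav_file.getframerate()


def matches_model(source: Union[str, BinaryIO], model: str) -> bool:
    """True if the file's sample rate equals the rate the model expects."""
    return wav_sample_rate(source) == EXPECTED_RATES[model]


def make_silent_wav(sample_rate: int, seconds: float = 0.1) -> io.BytesIO:
    """Build a short mono 16-bit silent WAV in memory, for demonstration only."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(b"\x00\x00" * int(sample_rate * seconds))
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    print(matches_model(make_silent_wav(16000), "en-us"))
    print(matches_model(make_silent_wav(16000), "en-uk"))
```

Files that fail the check would need to be resampled with an audio tool before being sent to the corresponding model.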

Box and Transcribe Words (Orthogonal)

- This model can be used to predict bounding boxes and transcriptions for all words in an image.

- Predicted bounding boxes are always orthogonal to the image edges.

- Bounding box and transcription predictions come from an instantiation of the Tesseract OCR model, which is designed to recognize printed text and is unlikely to work well on handwriting.

- In addition to an input column for the image, this model also takes an input for the language in which to make the transcription prediction. The values accepted are two-letter ISO 639-1 language codes. The following language codes are accepted: 'af': 'Afrikaans', 'ar': 'Arabic', 'cs': 'Czech', 'da': 'Danish', 'de': 'German', 'en': 'English', 'el': 'Greek', 'es': 'Spanish', 'fi': 'Finnish', 'fr': 'French', 'ga': 'Irish', 'he': 'Hebrew', 'hi': 'Hindi', 'hu': 'Hungarian', 'id': 'Indonesian', 'it': 'Italian', 'jp': 'Japanese', 'ko': 'Korean', 'nn': 'Norwegian', 'nl': 'Dutch', 'pl': 'Polish', 'pt': 'Portuguese', 'ro': 'Romanian', 'ru': 'Russian', 'sv': 'Swedish', 'th': 'Thai', 'tr': 'Turkish', 'zh': 'Chinese', 'vi': 'Vietnamese', 'zh-sim': 'Chinese (Simplified)', 'zh-tra': 'Chinese (Traditional)'

- Please refer to the model documentation for further support.

Box and Transcribe Words (Rotated)

- This model can be used to predict bounding boxes and transcriptions for all words in an image.

- Predicted bounding boxes can be rotated relative to the image edges.

- Bounding box predictions come from an instantiation of the Character-Region Awareness for Text Detection (CRAFT) model, which performs well on arbitrarily oriented or deformed text.

- Transcription predictions come from an instantiation of the Tesseract OCR model, which is designed to recognize printed text and is unlikely to work well on handwriting.

- In addition to an input column for the images, this model also takes an input for the language in which to make the transcription prediction. The values accepted are two-letter ISO 639-1 language codes. The following language codes are accepted: 'af': 'Afrikaans', 'ar': 'Arabic', 'cs': 'Czech', 'da': 'Danish', 'de': 'German', 'en': 'English', 'el': 'Greek', 'es': 'Spanish', 'fi': 'Finnish', 'fr': 'French', 'ga': 'Irish', 'he': 'Hebrew', 'hi': 'Hindi', 'hu': 'Hungarian', 'id': 'Indonesian', 'it': 'Italian', 'jp': 'Japanese', 'ko': 'Korean', 'nn': 'Norwegian', 'nl': 'Dutch', 'pl': 'Polish', 'pt': 'Portuguese', 'ro': 'Romanian', 'ru': 'Russian', 'sv': 'Swedish', 'th': 'Thai', 'tr': 'Turkish', 'zh': 'Chinese', 'vi': 'Vietnamese', 'zh-sim': 'Chinese (Simplified)', 'zh-tra': 'Chinese (Traditional)'

- Please refer to the CRAFT documentation and Tesseract documentation for further support.
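To make the difference between orthogonal and rotated boxes concrete, the sketch below computes the four corners of a rotated rectangle and the smallest orthogonal (axis-aligned) box that encloses it. The center, size and angle parameterization is an assumption for illustration; this article does not specify how either model encodes its boxes.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def rotated_box_corners(cx: float, cy: float, width: float, height: float,
                        angle_degrees: float) -> List[Point]:
    """Corners of a rectangle centered at (cx, cy), rotated about its center."""
    theta = math.radians(angle_degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_w, half_h = width / 2, height / 2
    offsets = [(-half_w, -half_h), (half_w, -half_h),
               (half_w, half_h), (-half_w, half_h)]
    # Rotate each corner offset, then translate it to the box center.
    return [(cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
            for dx, dy in offsets]


def enclosing_orthogonal_box(corners: List[Point]) -> Tuple[float, float, float, float]:
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) around the corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return min(xs), min(ys), max(xs), max(ys)


if __name__ == "__main__":
    corners = rotated_box_corners(10, 10, width=4, height=2, angle_degrees=30)
    print(enclosing_orthogonal_box(corners))
```

For slanted text, the enclosing orthogonal box is larger than the rotated one and can overlap neighboring words, which is why a rotated box can fit such text more tightly.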

Named Entity Recognition with spaCy

- Given text data, this model tokenizes the text and predicts the named entities within it.

- Based on the spaCy library for Natural Language Processing in Python.

- In addition to an input column for the text, this model also takes an input for the language schema. The following language codes are accepted: 'en': 'English', 'es': 'Spanish', 'de': 'German', 'fr': 'French', 'it': 'Italian', 'pt': 'Portuguese', 'nl': 'Dutch'

- See the model documentation for more information.

Label Pixels in Images of People

- This model assigns a class value (‘hair’ for human hair, ‘skin’ for bare skin, or ‘body’ for clothed body) to pixels in an image depicting a person. It can be used as part of a semantic segmentation workflow to pre-label images for human review.

- The underlying model is the HRNet semantic segmentation model trained on the "Look Into Person" dataset. The model works best on images that contain a single human subject, and may not perform well on images depicting multiple people.

- Please see the dataset documentation for more information.

Label Pixels for Objects

- Given an initial bounding box, this model generates a semantic segmentation mask for an object to differentiate it from the background.

- Based on the OpenCV implementation of the GrabCut algorithm.

- Please see the model documentation for more information.

Label Bounding Boxes in Street Scene Images

- This model creates labeled bounding box predictions for objects in street scenes such as vehicles, pedestrians, signs, poles and obstacles. It can be used as part of an autonomous vehicle object detection workflow to pre-label images for human review.

- The underlying model is the Cascade R-CNN model trained on the Berkeley DeepDrive dataset.

- Please see the dataset documentation for more information.

- Download ontology