Once your job is finished, we recommend auditing the output dataset to estimate its overall accuracy. This is extremely helpful in identifying which areas of your job are working well and which may need improvement. Typically, 30-50 rows is a large enough sample to gain insight into your data’s quality; however, the more rows you audit, the more accurate your estimate will be. Below are step-by-step instructions to complete an audit.

Note: The following instructions are explained using OpenOffice, but these steps can be applied to Excel and Google Docs as well.

- Download the aggregated results file.

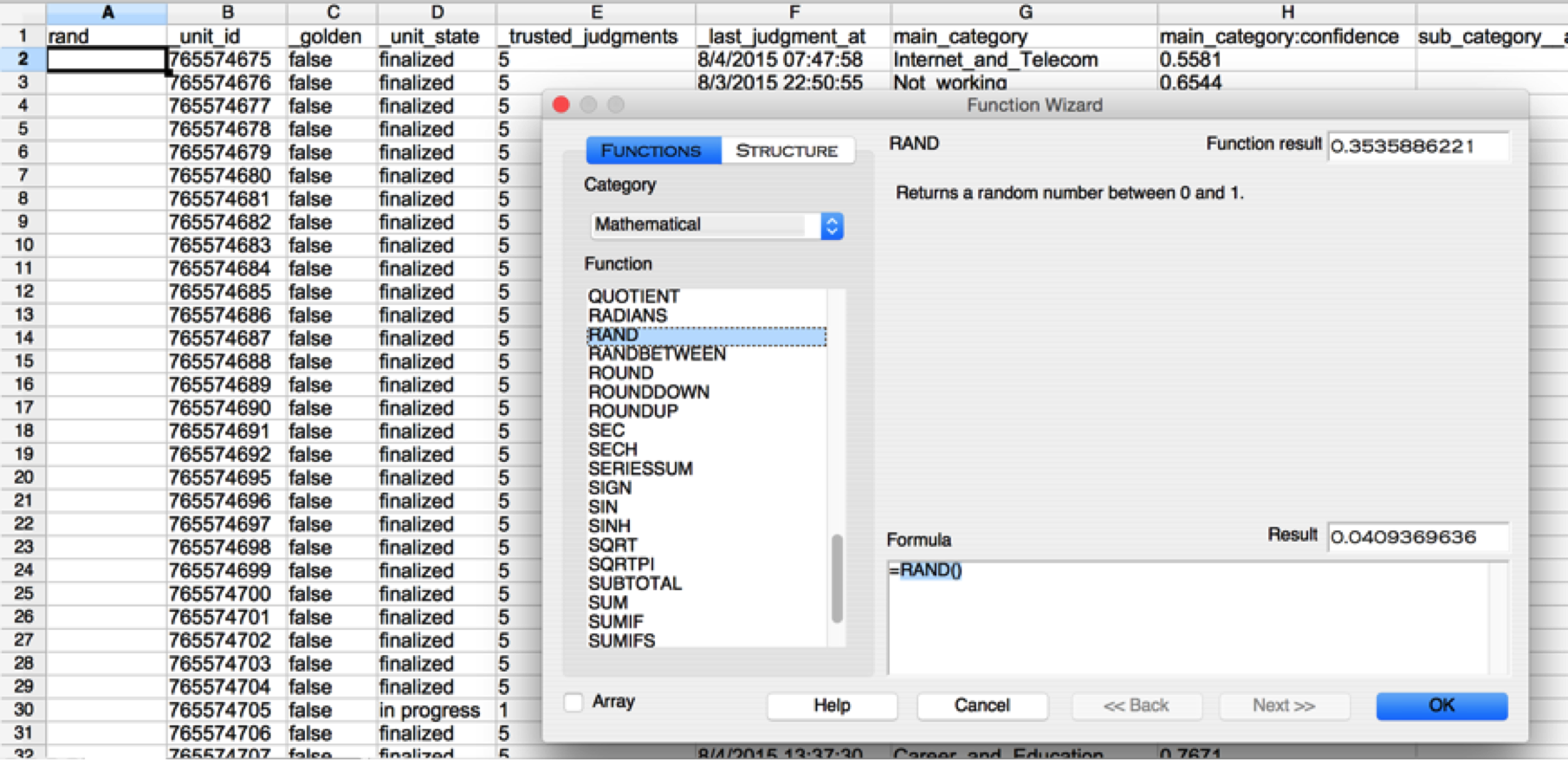

- Randomize the data.

Fig. 1: Randomize your data by using the RAND function to generate a random number for each row in the file.

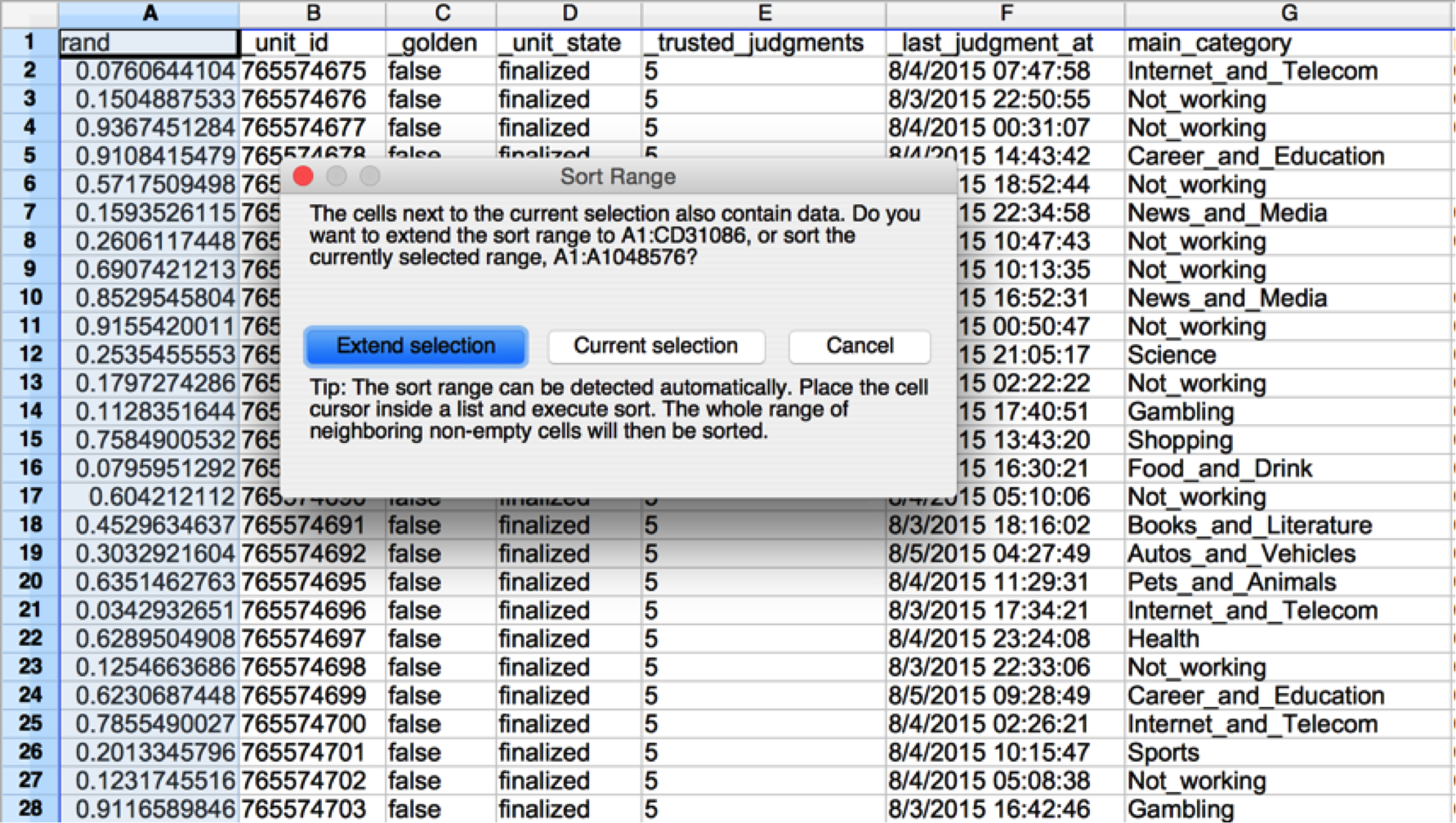

Fig. 2: Sort the file by the random number column, thus randomizing the data.

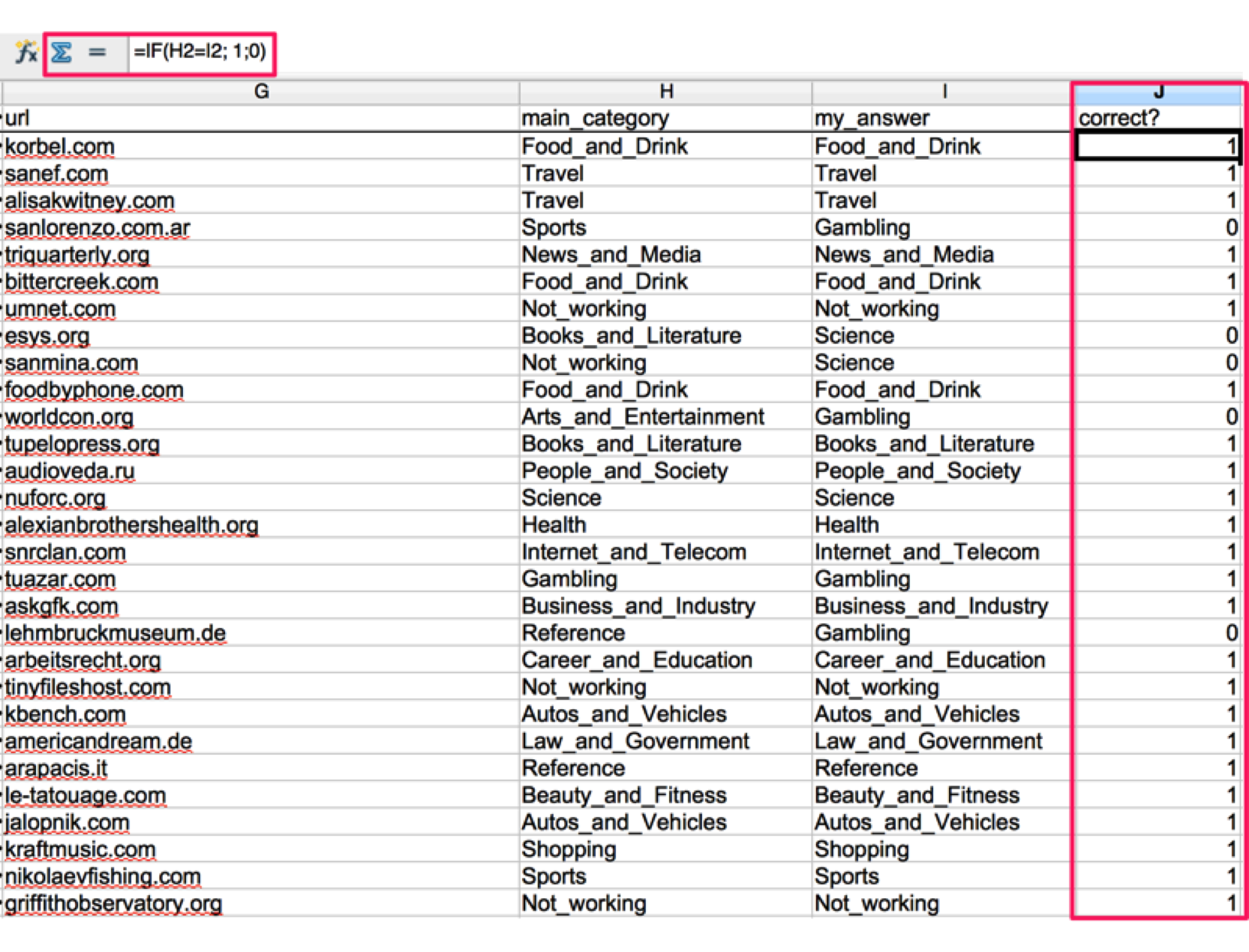
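If you prefer to script this step rather than use a spreadsheet, the same idea can be sketched in Python: attach a random number to every row, sort by it, and keep the first rows as your audit sample. This is only a minimal sketch. The `row_id` column, the sample data, and the function name `sample_rows_for_audit` are assumptions made for illustration and are not part of the results file format.

```python
import csv
import io
import random

# Hypothetical stand-in for the downloaded aggregated results file.
SAMPLE_CSV = """row_id,relevant_yn,main_category
1,yes,science
2,no,sports
3,yes,gambling
4,yes,news
5,no,science
"""


def sample_rows_for_audit(rows, sample_size=50, seed=None):
    """Return up to `sample_size` rows in random order."""
    rng = random.Random(seed)
    # Pair each row with a random number, like a RAND() column.
    keyed = [(rng.random(), row) for row in rows]
    # Sorting by that number shuffles the rows.
    keyed.sort(key=lambda pair: pair[0])
    return [row for _, row in keyed[:sample_size]]


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
audit_sample = sample_rows_for_audit(rows, sample_size=3, seed=42)
for row in audit_sample:
    print(row["row_id"], row["relevant_yn"], row["main_category"])
```

To read a real file instead of the inline sample, open it with `open(path, newline="")` and pass the file object to `csv.DictReader`. Fixing `seed` makes the sample reproducible, which can help if someone else needs to review the same rows.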

- Add a column next to each answer column in the file. In this new column, enter your own answer for every row you audit.

Fig. 3: Column my_answer contains your answers to each question. The answers you choose should match the format from the output data.

- Insert a column next to your answer column where you will check whether your answers match those of the contributors.

Fig. 4: Column “correct?” uses a formula to check whether the contributors’ answer matches your own, entering 1 for a match and 0 for a mismatch. If you are using Excel, the formula needs to be =IF(H2=I2, 1, 0).

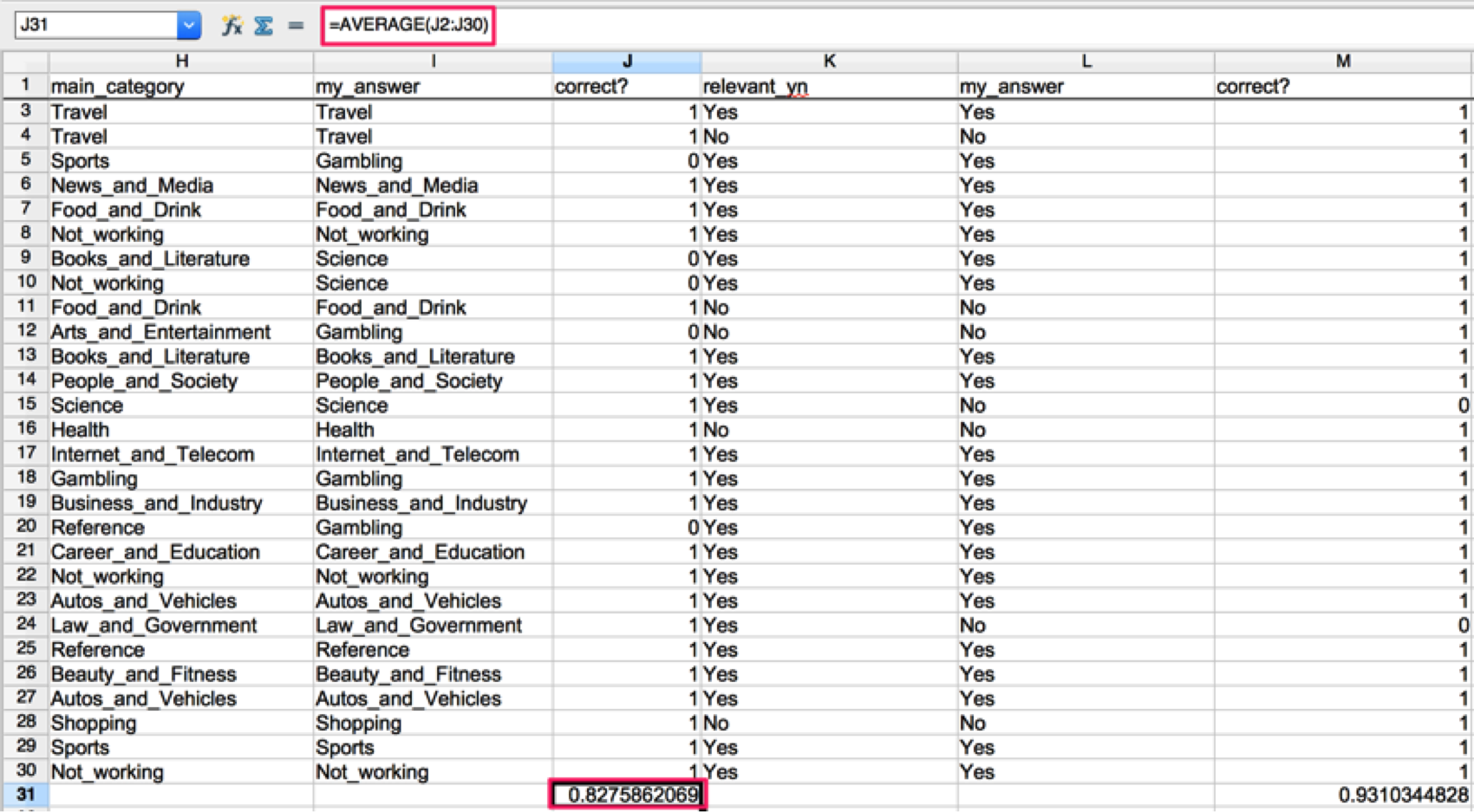

- Calculate the accuracy percentage of the responses for each column/question.

Fig. 5: Average the answers (1s and 0s) in your “correct?” column to get the overall accuracy for each question.
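The match check and the averaging can also be sketched in Python. The example below assumes each audited row holds the contributors' answer and your own answer side by side; the `my_` column prefix, the sample rows, and the function names are hypothetical. Note that the comparison here is exact and case-sensitive, while a spreadsheet comparison may treat case differently, so keep your answers in the same format as the output data.

```python
def mark_correct(contributor_answer, my_answer):
    """Return 1 for a match and 0 for a mismatch, like =IF(H2=I2, 1, 0)."""
    return 1 if contributor_answer == my_answer else 0


def accuracy_by_question(audited_rows, questions):
    """Average the 1/0 match flags for each question.

    `questions` maps each answer column to the column holding your own answer.
    """
    result = {}
    for answer_col, my_col in questions.items():
        flags = [mark_correct(row[answer_col], row[my_col]) for row in audited_rows]
        # Avoid dividing by zero when no rows were audited.
        result[answer_col] = sum(flags) / len(flags) if flags else None
    return result


# Hypothetical audited rows: contributor answers next to the auditor's answers.
audited_rows = [
    {"relevant_yn": "yes", "my_relevant_yn": "yes",
     "main_category": "science", "my_main_category": "health"},
    {"relevant_yn": "no", "my_relevant_yn": "no",
     "main_category": "sports", "my_main_category": "sports"},
    {"relevant_yn": "yes", "my_relevant_yn": "no",
     "main_category": "gambling", "my_main_category": "games"},
    {"relevant_yn": "yes", "my_relevant_yn": "yes",
     "main_category": "news", "my_main_category": "news"},
]

accuracy = accuracy_by_question(
    audited_rows,
    {"relevant_yn": "my_relevant_yn", "main_category": "my_main_category"},
)
print(accuracy)  # {'relevant_yn': 0.75, 'main_category': 0.5}
```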

- Use the audit results to identify which questions in your job are working well and which questions need improvement.

- In the example above, we can see ‘relevant_yn’ is high quality with an accuracy of 93%. However, ‘main_category’ is only 83% accurate, which indicates there are improvements we can make around that question.

- Once you’ve identified the question(s) you want to improve, try to identify any trends from the missed rows. In our case the categories ‘science’ and ‘gambling’ were consistently incorrect, indicating these categories may be confusing to contributors.
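One way to spot such trends is to count the missed rows by the answer you expected. The sketch below assumes the same side-by-side layout of contributor answers and your own answers; the `my_main_category` column name and the sample rows are hypothetical and only illustrate the idea.

```python
from collections import Counter


def missed_by_category(audited_rows, answer_col, my_col):
    """Count mismatched rows, grouped by the auditor's (expected) answer."""
    misses = Counter(
        row[my_col] for row in audited_rows if row[answer_col] != row[my_col]
    )
    # Most frequently missed categories come first.
    return misses.most_common()


# Hypothetical audited rows for the main_category question.
audited_rows = [
    {"main_category": "health", "my_main_category": "science"},
    {"main_category": "technology", "my_main_category": "science"},
    {"main_category": "games", "my_main_category": "gambling"},
    {"main_category": "sports", "my_main_category": "sports"},
    {"main_category": "news", "my_main_category": "news"},
]

trends = missed_by_category(audited_rows, "main_category", "my_main_category")
print(trends)  # [('science', 2), ('gambling', 1)]
```

Categories near the top of the list are the ones contributors miss most often in the audited sample, which makes them good candidates for extra examples or test questions.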

-

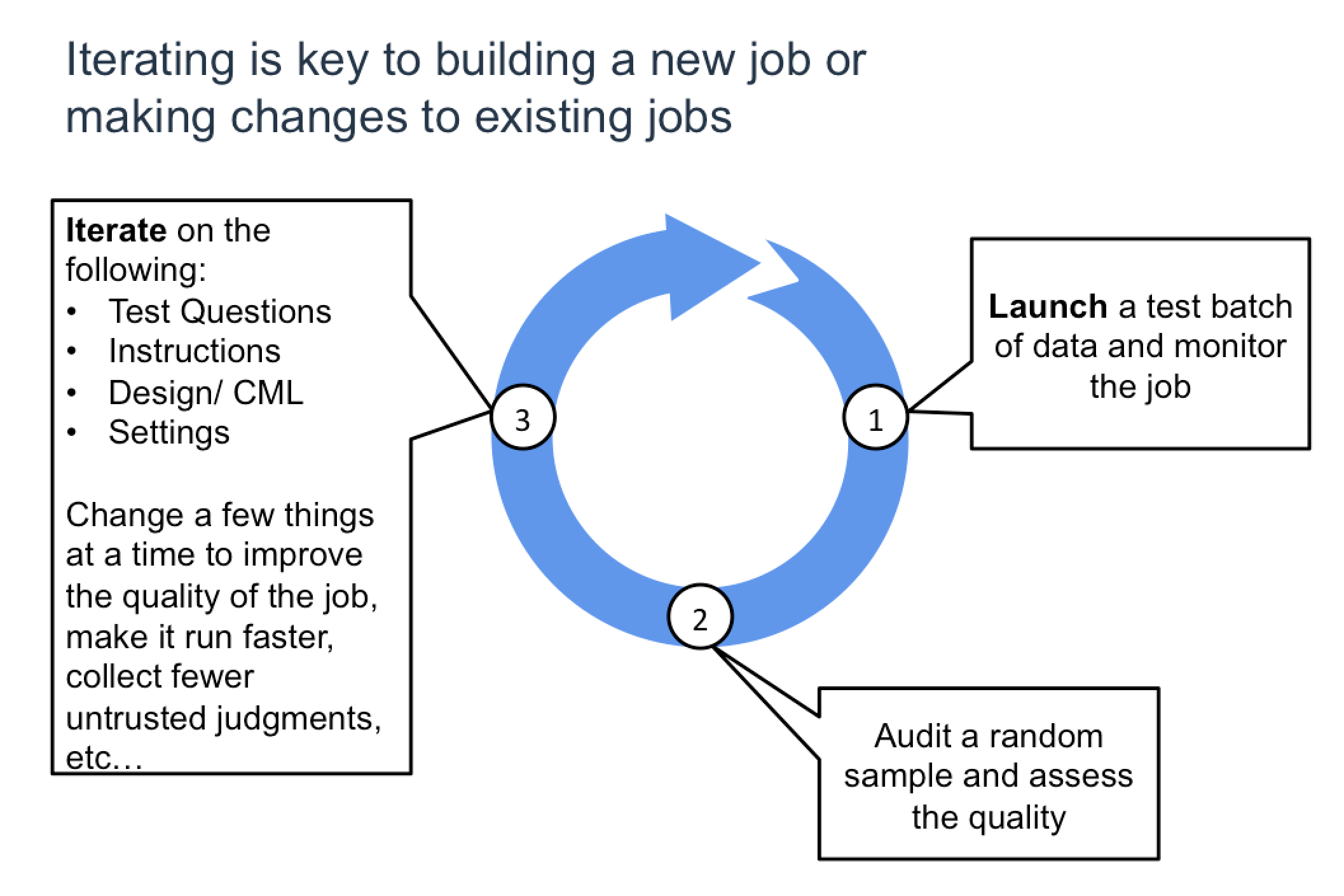

Improve the job design

- Using the problem areas / trends you identified, implement any necessary changes to the instructions, job design, and/or test questions. In our example, it will likely help to add specific examples around the ‘science’ and ‘gambling’ categories to the instructions to provide further clarification. Additionally, creating test questions that target these categories will provide further training for contributors and ensure their comprehension.

- Launch the improved job and repeat the above steps as needed

- Once you’ve implemented your design improvements, launch a test run (100-500 rows) and conduct another audit to ensure your overall accuracy has improved. Repeat the steps above as necessary until your job’s overall accuracy is where you need it.
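When comparing one audit to the next, it can help to keep in mind how much an audited accuracy can vary with sample size. The sketch below uses the standard normal approximation for a proportion to estimate a rough 95% margin of error. This is an assumption added for illustration, not a method prescribed by the platform, and the approximation is loose for small samples or accuracies close to 0% or 100%. The function name `accuracy_margin` is hypothetical.

```python
import math


def accuracy_margin(accuracy, audited_rows, z=1.96):
    """Approximate 95% margin of error for an audited accuracy.

    Uses the normal approximation: z * sqrt(p * (1 - p) / n).
    """
    if audited_rows <= 0:
        raise ValueError("audited_rows must be positive")
    return z * math.sqrt(accuracy * (1 - accuracy) / audited_rows)


# An 83% audited accuracy is much less certain with 30 rows than with 200.
margin_30 = accuracy_margin(0.83, 30)
margin_200 = accuracy_margin(0.83, 200)
print(f"30 rows:  +/- {margin_30:.1%}")
print(f"200 rows: +/- {margin_200:.1%}")
```

With these inputs the margin is roughly 13 percentage points for 30 rows and roughly 5 for 200 rows, so a small change in accuracy between two 30-row audits may not reflect a real improvement.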

An in-platform Spotcheck feature is available in an invite-only beta for selected customers with a license. Contact your Customer Success Manager for more details.